
On the technological frontier, artificial intelligence (AI) continues to spur extraordinary innovations. Yet its rise is also accompanied by growing fears that it could lead to the extinction of humanity. Prominent AI figures such as Sam Altman, CEO of OpenAI, and Geoffrey Hinton, widely regarded as one of the “godfathers” of AI, have explicitly voiced this fear.

An open letter from the Center for AI Safety, signed by more than 300 prominent figures, has drawn attention to the existential threat that AI may pose. However, exactly how this human-made technology could become humanity’s undoing remains unclear.

AI Poses Risk of Human Extinction

Artificial intelligence could open up many pathways to society-scale risks, according to Dan Hendrycks, director of the Center for AI Safety. Misuse of AI by malicious actors is one such scenario.

“There’s a very common misconception, even in the AI community, that there only are a handful of doomers. But, in fact, many people privately would express concerns about these things,” said Hendrycks.

Bad actors could, for example, use AI to design bioweapons deadlier than natural pandemics, or deliberately launch rogue AI intended to cause widespread harm.

An AI system with sufficient intelligence or capability could wreak havoc across society.

“Malicious actors could intentionally release rogue AI that actively attempts to harm humanity,” added Hendrycks.

Short-term threats are not the only concern for experts, however. As AI permeates more of the economy, ceding control to the technology could lead to long-term problems.

Such dependence could make “shutting them down” disruptive or even impossible, putting humanity’s control over its own future at risk.

Misuse and Its Far-Reaching Implications

As Sam Altman cautioned, AI’s ability to create convincing text, images, and videos can lead to significant problems. Indeed, he believes that “if this technology goes wrong, it can go quite wrong.”

Take, for instance, a fake image depicting a large explosion near the Pentagon that circulated on social media. Within minutes, numerous accounts, including several verified ones, had shared the deceptive photo, amplifying the confusion and causing a temporary dip in the stock market.

Such misuse points to AI’s potential to spread misinformation and erode social cohesion. Elizabeth Renieris, a senior research associate at Oxford’s Institute for Ethics in AI, argued that AI can “drive an exponential increase in the volume and spread of misinformation, thereby fracturing reality and eroding the public trust.”

Another worrying trend is AI “hallucination,” an unsettling phenomenon in which AI systems produce false yet plausible-sounding information.

This flaw, highlighted in a recent incident involving ChatGPT, could undermine the credibility of companies that use AI and further fuel the spread of misinformation.

Erosion of Jobs and Explosion of Inequality

The swift adoption of AI across various industries casts a long, worrisome shadow over the job market. As the technology evolves, the potential elimination of millions of jobs has become a pressing concern.

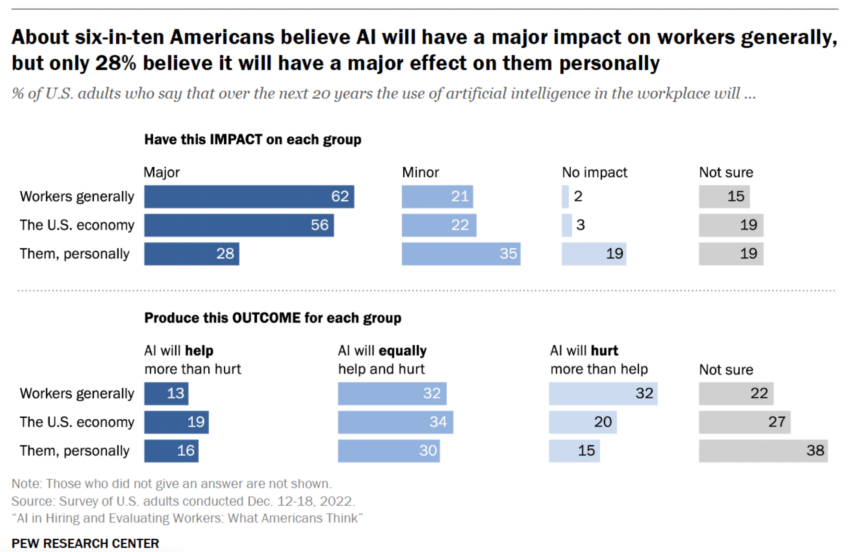

A recent survey revealed that six-in-ten Americans believe using AI in the workplace will significantly affect workers in the next 20 years. Around 28% of the respondents think using the technology will affect them personally, and another 15% believe that “AI would hurt more than help.”

A surge in automated decision-making may contribute to increasing bias, discrimination, and exclusion. It may also foster an environment of inequality, particularly affecting those on the wrong side of the digital divide.

Moreover, growing dependence on AI could lead to an “enfeeblement” of humanity, similar to the dystopian scenario depicted in the film WALL-E.

The Center for AI Safety warned that control over AI might become increasingly concentrated in the hands of a small number of entities. This could enable “regimes to enforce narrow values through pervasive surveillance and oppressive censorship.”

This bleak future vision highlights the potential risks associated with AI and underlines the need for stringent regulation and control.

The Call for AI Regulation

The gravity of these concerns has led industry leaders to advocate for tighter AI regulations. This call for government intervention echoes the growing consensus that the development and deployment of AI should be carefully managed to prevent misuse and unintended societal disruption.

AI has the potential to be a boon or a bane, depending on how it is handled. It is crucial to foster a global conversation about mitigating the risks while reaping the benefits of this powerful technology.

UK Prime Minister Rishi Sunak has pointed out that AI has already helped people with paralysis to walk and has aided the discovery of new antibiotics. Nonetheless, he stressed that this work must be carried out safely and securely.

“People will be concerned by the reports that AI poses existential risks, like pandemics or nuclear wars. I want them to be reassured that the government is looking very carefully at this,” said Sunak.

Managing AI must become a global priority if it is not to become a threat to human existence. Harnessing its benefits while mitigating the risk of human extinction will require caution and vigilance. Governments must also embrace regulation, foster global cooperation, and invest in rigorous research.

Disclaimer

Following the Trust Project guidelines, this feature article presents opinions and perspectives from industry experts or individuals. BeInCrypto is dedicated to transparent reporting, but the views expressed in this article do not necessarily reflect those of BeInCrypto or its staff. Readers should verify information independently and consult with a professional before making decisions based on this content.
